
Knowledge governance in the age of AI

Governance: what is it good for?

I’ve recently had the good fortune to observe a lot of the enlightening programming by Paul Washington and his team at The Conference Board’s ESG Center. It’s clear to me that, while “E” environmental and “S” social goals are ones that most of us can understand and relate to, “G” governance is more esoteric. In the enterprise world it concerns issues like board membership, proxy voting, executive structure, and the like — things of interest mostly to corporate lawyers and activist shareholders.

IBM defines enterprise governance as “the set of processes and practices by which you align with your strategic objectives, assess and manage risk, and ensure that your company’s resources are used responsibly.” By that definition, it’s both quite broad and hugely impactful on how enterprises operate.

Governance is an unwieldy term that seems out of place in our age of personal freedom and entrepreneurialism. Few of us welcome the idea of being “governed” by anyone else, with its additional implied burdens of regulation and compliance.

But self-governance is essential to how every organization, from our country down to our individual households, runs. In the US, our Constitution and body of laws and precedents serve as our platform for governance. In some industries (accounting, for example), self-governance serves as a proxy for regulation by others.

We are a knowledge-driven society

In their recent book The New Knowledge, university professors Blayne Haggart and Natasha Tusikov emphasize the importance of knowledge as the ultimate source of global economic and social power in the near future. “The creation and control of knowledge have become economic and social ends in themselves…A Knowledge-driven society requires that we pay particular focus to questions of knowledge governance.”

Knowledge is the master resource that has provided us with opportunities and value for five centuries, and will continue to do so — if we manage and steward it with the respect it requires and deserves.

The path is not always easy or clear, though. We have now arrived at a moment when disinformation is both rampant and demonstrably corrosive to our democracy and our society as a whole. And AI is a key player in that. I’ve written that generative AI is potentially an industrial-strength disinformation machine, especially to the extent its sources are opaque (and, thereby, non-accountable). I’ve long believed that “sunlight is the best disinfectant,” meaning that trust (of anything) is largely based on transparency and accountability. At this moment, AI has little of either and should be regarded with caution until this changes.

How transparent is AI?

Much of the narrative about generative AI has been driven by the major vendors — and is thereby subject to all the biases you might expect from anyone trying to sell you something. Some of the least biased — and most alarming — analyses of AI come out of academic research centers. One of these, the Stanford Center for Research on Foundation Models (CRFM), has just published a next-level analysis of transparency of foundation models (including ChatGPT and nine other LLMs).

CRFM’s working premise — like my own — is that “Transparency is an essential precondition for public accountability, scientific innovation, and effective governance of digital technologies. Without adequate transparency, stakeholders cannot understand foundation models, who [sic] they affect, and the impact they have on society.”

And the winner is — nobody

The CRFM study scores ten major FM vendors (including Meta, Google, and OpenAI) on one hundred quantitative variables to create a Foundation Model Transparency Index (FMTI). This index covers three high-level domains: upstream (the data, labor, and computing resources used to build the model), model-level (capabilities, risks, and evaluations of the model), and downstream (distribution channels, usage policies, and affected geographies). These domains are direct analogues of what I call the knowledge value chains: the supply chain, the production chain, and the demand chain.

The CRFM findings confirm empirically what many of us have been saying intuitively: the top ten available models are each deficient in most aspects of transparency, scoring an average of 37 out of 100, with the highest score of any model being 54. They find that the lowest levels of transparency are in upstream resources, with three of the top ten vendors scoring 0 points on upstream transparency.

This means that the basic accountability for the data collection (the sites used, the labor employed, and so on) is not fully available to outsiders, including potential customers. While the vendors claim this lapse is for competitive reasons, their critics claim that they are “looking the other way” on potentially huge IP infringements, already the subject of hundreds of lawsuits. Whatever the reasons, the result to the user is that the models are largely black boxes from which little transparency emanates.
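
For readers who want to see how an index of this kind adds up, here is a minimal sketch in Python. It is not CRFM's method or data. The indicator names and values below are invented for illustration, and the sketch assumes each variable is simply worth one point when a vendor satisfies it, with points then totaled by domain.

```python
from collections import defaultdict

# Hypothetical toy data, NOT taken from the CRFM study:
# (domain, indicator name, whether the vendor satisfies it)
indicators = [
    ("upstream", "data sources disclosed", False),
    ("upstream", "labor practices disclosed", False),
    ("model", "capabilities described", True),
    ("model", "risks evaluated", False),
    ("downstream", "usage policy published", True),
    ("downstream", "distribution channels listed", True),
]


def transparency_scores(rows):
    """Return (points per domain, total points).

    Assumption: one point per satisfied indicator, no weighting.
    """
    by_domain = defaultdict(int)
    for domain, _name, satisfied in rows:
        by_domain[domain] += int(satisfied)
    return dict(by_domain), sum(by_domain.values())


by_domain, total = transparency_scores(indicators)
print(by_domain)
print(f"{total} of {len(indicators)} points")
```

In this toy example the invented vendor earns nothing upstream while doing better downstream, which is the same shape of result the study reports for several real vendors.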

The current state of digital risk governance (DRG)

Researchers at The Conference Board (TCB), where I’m a Senior Fellow (though not involved with this study), recently surveyed over 1,100 individuals regarding their use of generative AI for work. They found that, while 56% report using AI, only 26% say their employer currently has an AI policy in place. Given the continual stream of new revelations about AI, the litigation on its use, and the regulations and legislation currently enacted in more than 60 countries worldwide (though not yet the US), the picture of enterprise AI usage two or three years from now could look much different from how it looks today.

Digital governance can no longer be separated from enterprise governance as a whole. Whether in reaction to, or anticipation of, regulation, companies must develop and deploy their own safeguards around AI and other emerging technologies. But they seem unevenly equipped to do so; CEOs also report to TCB that only about 50% of their board members fully understand the threats and opportunities offered by new technologies, including (but not limited to) AI.

What can organizations do?

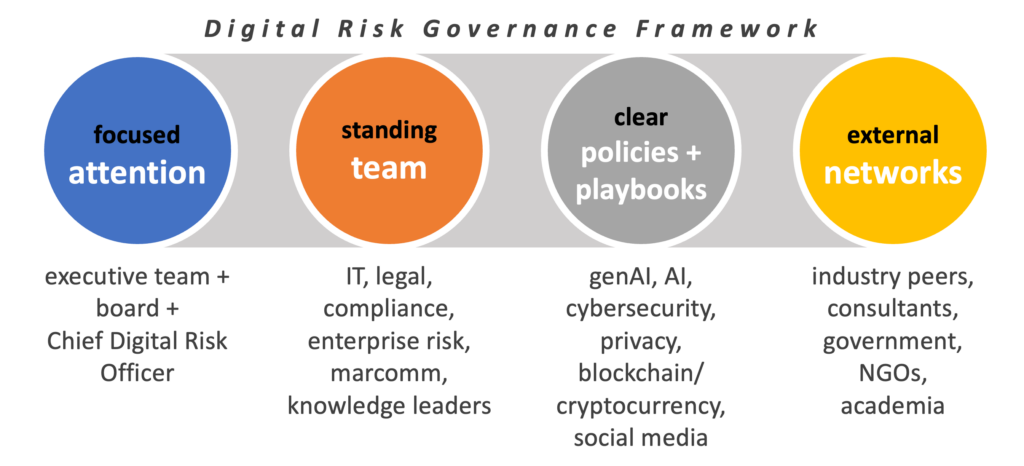

The rush of new technologies that hold great promise, but that companies neither fully grasp nor yet respond to effectively, continues unabated. In addition to generative AI, these include other kinds of AI, cybersecurity and privacy, cryptocurrencies and other digital finance, and even social media and digital advertising…with others likely to soon follow. To respond effectively to these ongoing opportunities and threats, I’ve proposed a Digital Risk Governance (DRG) framework, as shown in the diagram. It consists of the following core elements:

- Attention — The focus of the executive team and the Board must be concentrated, with demonstrated expertise present on the Board. Ideally there will be a C-level executive, the Chief Digital Risk Officer (CDRO).

- Team — The CDRO should recruit and lead a standing team comprising representatives from IT, legal and compliance, enterprise risk, marketing and internal communications, and knowledge management.

- Policies and Playbooks — A set of core values and principles must be developed, supported by a scenario-based tactical response platform.

- Networks — Networked communities must be developed and nurtured. These include industry peers, outside consultants, government agencies, NGOs, and academia.

I discussed digital risk in November 2023 at KM World. If you’re interested in this, contact me and I’ll send you my slides, which I have revised since that event.
