
Knowledge and Agency

“It’s not that we have too little knowledge; it’s that what we do have, we use so unwisely.” I’ve repurposed this epigram from the brilliant ancient Roman thinker Seneca to demonstrate why I pivoted my career from knowledge producer to knowledge strategist.

As always, I had much help from clients along the way. I so often heard some version of the refrain, “We buy lots of information, then don’t use most of it, or don’t use it effectively,” that I realized this was a widespread, core problem worth fixing. There’s even a book on it, The Knowing-Doing Gap by Jeffrey Pfeffer and Robert Sutton, which was most helpful to me. I rededicated my attention, and my career, to diagnosing and solving this problem.

Why knowledge?

There are said to be over one billion knowledge workers in the world. What are we all doing? What good do we do?

I’ve long been curious about this, so I observed my clients, spoke with many others, and ended up writing a book on it all. My work led me to one simple conclusion: the value of knowledge is agency, meaning what you do with that knowledge. It’s nice to analyze and understand things, but the point is to effect some positive change in them. “We knew, but did not act in time” is a common refrain in business (and other) disasters. Where knowledge produces no agency, no benefits result and no value is produced. (While noting that “value” is itself both dynamic and context-dependent along a socio-economic continuum, I’ll leave that discussion for another time.)

For knowledge to be anything more than nice-to-have, we must have a clear idea of the work it accomplishes, and of the results, outcomes, and impacts it has. My teams eventually made it standard practice to have this conversation with clients before undertaking any research on their behalf: Why are we doing this? How will it make a difference?

An unavoidable byproduct of producing knowledge is the cost incurred in doing so. When knowledge provides no benefit, it is categorized financially as an overhead expense: a sunk cost. Knowledge can, and should, be treated as an investment that yields a subsequent return, an ROI.

The knowledge value proposition

I personally experienced this effect years ago. I often work with digital media (audio and images) that require complex tools that are continually being updated. I’ve noticed that I never learn something so quickly as when I have a goal to accomplish that depends on that learning: a problem to be solved, for example. An online video or discussion thread, accompanied by a good dose of trial and error, often serves as my primary training. And when something works, I usually reinforce it by writing down some record of my insight, for example on a Post-it note stuck to the bottom of my screen. The value of knowledge is utility-driven: whatever leads to a successful outcome is, by definition, high in value.

‘Agentic weighting’ makes sense, too, in how we select professionals to help us. In a doctor’s office you may see her diplomas and certificates mounted in frames on the wall, symbols of her knowledge. While these can be reassuring, you likely care less about them than about her agency: whether she understands and fixes whatever ailment brought you to her.

Much of my work develops and amplifies this simple (yet effective) principle, scaled up from the personal to the enterprise level. In doing so, I draw on techniques from both knowledge management and business strategy.

What is intelligence?

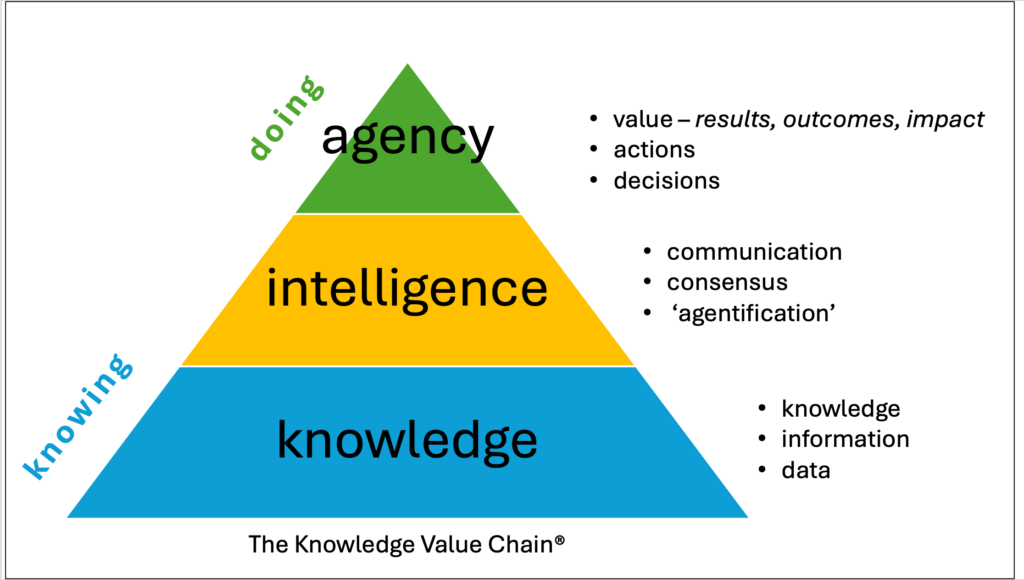

I first described the Knowledge Value Chain® model nearly three decades ago. It draws a clear distinction between knowledge, meaning the transformation of data into information, knowledge, and intelligence, on the one hand, and agency, meaning how you use that knowledge in making decisions, devising and executing actions, and (ultimately) producing enterprise value, on the other.

KNOWING is an essential first step toward action; AGENCY (‘doing’) is where the value is produced. The interface between knowledge and agency is INTELLIGENCE. Once knowledge has been socialized and ‘consensualized’ among enterprise leaders, it is deemed ‘agentic’ — ready to be acted upon.

The KVC is technology-agnostic: it works in essentially the same way whether the ‘intelligence’ is a group of people discussing options and making a group decision, or a group of data sets and computational algorithms (in effect) making that decision. Even when machines make decisions algorithmically, those algorithms are (at best) designed to capture the decisions as well-informed human experts would make them. (What first interested me in AI in the 1980s was an approach called ‘knowledge engineering,’ whose goal was to capture and codify the decision criteria and processes of subject-matter experts.)

Many ‘decisions’ in life are essentially repetitive, rote, and automatic. For example, ‘when the temperature in the room gets down to 67°F, turn on the heat; when it gets up to 70°F, turn it off.’ Or ‘buy if FabCorp stock sinks to $25, sell if it rises to $45.’ There is a simple data point (room temperature or stock price, in these examples) and a simple responsive action (turn the heat on or off, buy or sell the stock).
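Rules of this kind are simple enough to write down directly. The Python sketch below is a minimal illustration, not anyone’s production logic; the function names and constants are hypothetical, and it assumes that ‘gets down to’ and ‘rises to’ mean reaching a threshold inclusively. Note that the two thermostat thresholds imply a band between 67°F and 70°F in which the heater simply keeps its current state.

```python
from typing import Optional

# Hypothetical thresholds, taken from the examples in the text.
HEAT_ON_AT_F = 67.0   # turn the heat on at or below this temperature (°F)
HEAT_OFF_AT_F = 70.0  # turn the heat off at or above this temperature (°F)
BUY_AT = 25.0         # buy FabCorp at or below this price ($)
SELL_AT = 45.0        # sell FabCorp at or above this price ($)


def thermostat(temp_f: float, heat_on: bool) -> bool:
    """Return the new heater state for one temperature reading.

    One data point in, one action out. Between the two thresholds the
    heater keeps its current state, so it does not cycle on and off.
    """
    if temp_f <= HEAT_ON_AT_F:
        return True
    if temp_f >= HEAT_OFF_AT_F:
        return False
    return heat_on


def stock_rule(price: float) -> Optional[str]:
    """Return 'buy', 'sell', or None (hold) for one price quote."""
    if price <= BUY_AT:
        return "buy"
    if price >= SELL_AT:
        return "sell"
    return None


if __name__ == "__main__":
    heat_on = False
    for reading in [69.0, 66.5, 68.0, 70.2, 68.5]:
        heat_on = thermostat(reading, heat_on)
        print(f"{reading}F -> heat {'on' if heat_on else 'off'}")
    print([stock_rule(p) for p in [24.0, 30.0, 46.0]])
```

Run as a script, the readings leave the heat off, on, on, off, off, and the three stock quotes yield `['buy', None, 'sell']`. Even a rule this small has to settle a question the plain-English version leaves implicit: what to do between the thresholds.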

Scaling to the enterprise

Organizations, and the people in them, also make simple, routine decisions that can safely and effectively be ‘canned’ and implemented automatically by machine logic. Machines can excel at this kind of thing, thanks to the superhuman speed and scale at which they operate.

But even superficially ‘simple’ decisions are not always so simple. Even in the stock example, you might ask: am I selling the whole position if it hits $45, or just part of it? If part, how big a part? From which tranche, earlier or more recent shares? Should I buy something else with the proceeds, or just leave them in an interest-bearing cash account? And so on…

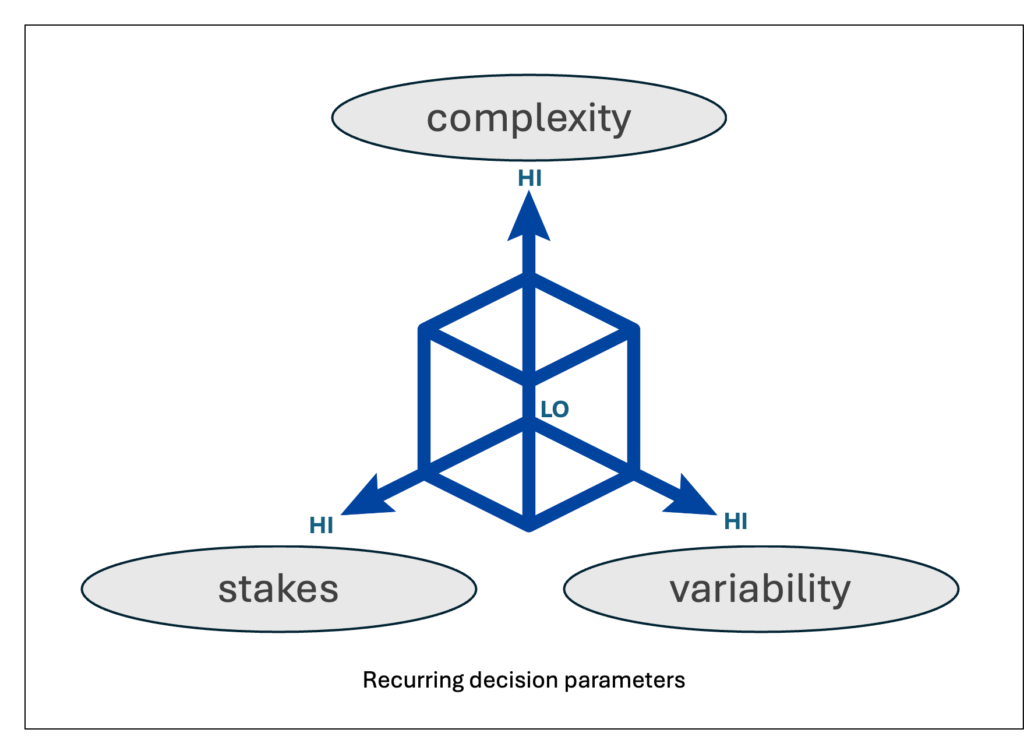

Most organizational decisions are significantly more complex than this. Sometimes not all the decision factors are evident (‘unknown unknowns’), and the ones that are known may be changing rapidly. The decisions that can best be canned and ‘botted’ are those with low complexity, low variability, and low stakes (i.e., a low cost of a wrong decision).
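One way to make that screening rule concrete is an all-three-must-be-low check. This Python sketch is hypothetical throughout: the 1-to-5 ratings, the cutoff of 2, and the example decisions are illustrative assumptions, not a published scoring method. The design choice it encodes comes from the text: a high rating on any single factor is enough to keep humans in the decision.

```python
from dataclasses import dataclass

LOW = 2  # hypothetical cutoff: a rating of 2 or less counts as "low"


@dataclass
class Decision:
    """A decision rated on the three factors named in the text.

    Ratings are hypothetical scores from 1 (low) to 5 (high).
    """
    name: str
    complexity: int
    variability: int
    stakes: int  # roughly, the cost of a wrong decision


def can_be_botted(d: Decision) -> bool:
    """True only if complexity, variability, and stakes are all low."""
    return max(d.complexity, d.variability, d.stakes) <= LOW


if __name__ == "__main__":
    decisions = [
        Decision("thermostat setpoint", complexity=1, variability=1, stakes=1),
        Decision("partial sale of a stock position", complexity=3, variability=2, stakes=3),
        Decision("response to an unprecedented crisis", complexity=5, variability=5, stakes=5),
    ]
    for d in decisions:
        verdict = "automate" if can_be_botted(d) else "keep humans in the loop"
        print(f"{d.name}: {verdict}")
```

Taking the maximum rather than an average is deliberate: averaging would let low complexity ‘buy back’ high stakes, which is exactly the trade the text warns against.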

Escalating agency

Algorithm-driven agency comes in two varieties, epistemic and physical. Epistemic agency consists of having an AI transmute one form of information into another, with minimal human intervention. For example, an agentic AI can transform a graphic PowerPoint slide into a spoken-word presentation (or podcast): information in form A becomes information in form B.

Physical agency means causing an outcome in the physical world. Technologically, this is often a short leap beyond epistemic agency. Given the increasing integration of our physical and epistemic worlds, the ‘knowing-doing gap’ can seem narrow, nearly seamless: I push a few buttons on my computer, and a day or so later an item I need shows up at my doorstep.

In our thermostat example, the epistemic event of triggering the thermostat causes a physical-world event with material consequences (i.e., turning up the furnace, which causes more fuel to be consumed, which causes my heating bill to rise). Here, both the complexity and stakes are relatively low.

A new realm

What if we raise the complexity and/or the stakes? We then enter another realm altogether, both intellectually and ethically. This is now technically possible due to the advanced state of robotics and its linkages to AI. Suppose, for example, I ask a robotic weaponized drone to assassinate someone whose image I’ve uploaded. Such ‘Lethal Autonomous Weapons Systems’ (informally called ‘slaughterbots’) are being deployed in live ‘tests’ of the new warfare occurring in several of the world’s active regional wars.

This is living proof of Prof. Luciano Floridi’s memorable maxim to the effect that AI is the divorce of intelligence from agency. Under algorithmic control, decision making in any traditional (read: human) sense is bypassed (sacrificed?) in the service of speed and expediency.

With machines, this is always the case, and it is core to their purpose. In my simple example, a thermostat ‘agentically’ turns up the heat but cannot be said to ‘know’ the room’s temperature in any meaningful way. It simply responds according to how its underlying sensor, digital or analog, is calibrated.

Bayesians and black swans

What about the critical third factor, variability? What if a complex, high-stakes decision becomes (in our view) less variable, as in the progression: (1) we’ve done this before, (2) we do this frequently, (3) this is how we do it, (4) this is how it’s (best) done?

In such cases, it’s tempting to automate these decisions, despite two major drawbacks of algorithmic decision making:

- it’s Bayesian only to a limited extent — typically not adept at taking in new information in real time — and

- it’s stumped by ‘edge cases’: emergent situations that have never been documented before (‘black swan events’) and are therefore not part of the model’s training.
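‘Bayesian’ here refers to revising a belief as each new piece of evidence arrives. A minimal Python sketch of that updating via Bayes’ rule follows; the hypothesis, the probabilities, and the function name are hypothetical, chosen only to show the mechanics, and the two pieces of evidence are assumed to be conditionally independent. An alarm from an imperfect sensor raises the belief that an event is real, and a later failure to confirm it lowers the belief again. A system that acts on the first estimate, without folding in the second piece of evidence, misses that correction.

```python
def bayes_update(prior: float, p_e_if_true: float, p_e_if_false: float) -> float:
    """Return P(H | E) by Bayes' rule.

    prior        -- P(H), belief in hypothesis H before seeing evidence E
    p_e_if_true  -- P(E | H), chance of seeing E if H is true
    p_e_if_false -- P(E | not H), chance of seeing E if H is false
    """
    joint_true = p_e_if_true * prior
    p_e = joint_true + p_e_if_false * (1.0 - prior)  # total probability of E
    return joint_true / p_e


if __name__ == "__main__":
    # Hypothetical numbers. H = "the alarmed event is real".
    belief = 0.01  # a rare event, before any evidence
    # Evidence 1: the sensor alarms. Assume it fires for 99% of real
    # events and falsely for 5% of non-events.
    belief = bayes_update(belief, 0.99, 0.05)
    print(f"after alarm:           {belief:.3f}")
    # Evidence 2: an independent check fails to confirm. Assume it misses
    # 10% of real events and comes back negative for 95% of non-events.
    belief = bayes_update(belief, 0.10, 0.95)
    print(f"after no confirmation: {belief:.3f}")
```

With these made-up figures, the alarm lifts the belief from 1% to about 17%, and the failed confirmation drops it to about 2%.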

A Fortune 500 CEO was recently gunned down in broad daylight in midtown Manhattan, about two miles from where I write this. Companies are rapidly rethinking how they handle key-person physical security, internal communications, and even public affairs in such cases. But the situation is unprecedented, with little relevant past to extrapolate from: a trifecta of high complexity, high variability, and high stakes. The top thinkers of the enterprise are looped in. Whether decision-bots are too, I’d love to know, but I doubt they would have much of value to offer, in this or in many of the other significant decisions organizations must face.

Whither human agency?

There are hard decisions in life, ones that, given a choice, most people would prefer not to have to make. Take, for example, whom to assassinate. An AI bot may literally pull the trigger, but a human is involved at one or more steps. According to Pentagon rules, this includes a review, before the moment of ‘agency,’ that asks: Is this the correct target? How confident do you need to be that it’s the right person? Under what conditions, e.g., with what concern for collateral harm to non-targets? What criteria are used to target people in the first place? And so on…

The twist is that such a review must necessarily include the algorithm designers. These people are almost never physically present at the point of agency. But the criteria they set — baked deep into the model, hidden from scrutiny — determine the model’s output, ergo its agency.

A new Turing test?

You may be able to mechanize discrete, localized sections of a complex chain, but likely not the entire chain. In fact, I’d propose the following modernized Turing test for an agentic AI: when such an appliance can book my flight, hotel, and car rental, applying my miles and points to my best advantage, I’ll say they have something. Yes, including bag checks and seat assignments. In most real-world tests, ‘agentic AI’ systems immediately run into firewalls and ‘I’m not a robot’ CAPTCHAs, and cannot proceed as desired.

With AI systems, there is talk of keeping ‘humans in the loop,’ sometimes as if that were an inconvenient, if necessary, afterthought. The need arises mainly because most LLM-based systems are currently riddled with output errors and in effect require human intervention (moderation, fact-checking, babysitting), which in the training stage is usually performed by low-wage hourly workers in developing economies.

Human-in-the-loop (HITL) is a good principle in theory. In practice, humans using AI have been observed in a range of settings to ‘cognitively offload’ decision making to machines. They are, in effect, attributing (whether consciously or not) some superhuman level of authority to the machines.

The Man Who Saved the World

Let us be ever mindful that simulated decision making is just that: a simulacrum of the real deal. Yes, human decision making is biased and flawed in many ways. But simulating it only amplifies, scales, and empowers those flaws, with potentially catastrophic consequences. I’ve written previously about the two Boeing 737 MAX crashes that were apparently caused by failed attempts at human overrides of an automated flight system.

An even more compelling example is that of Stanislav Petrov, “the man who saved the world.” On September 26, 1983, Petrov was the duty officer at a Soviet nuclear early-warning command center outside Moscow. At a time when US-Soviet relations were especially tense, the (expensive, sophisticated) alert system picked up signals of five presumed nuclear missiles incoming from the western US. Immediately notifying his superiors in Moscow could have triggered a major retaliatory response, and a counter-response from the US, resulting in global thermonuclear war.

Instead, Lieutenant Colonel Petrov delayed such ‘agency’ for a few tense minutes until confirmation could come from Soviet radar. When no such confirmation was reported, he correctly concluded that the big, expensive warning system had reported in error.

He later attributed his ‘heroism’ to human intuition about factors likely absent from the warning system’s algorithm. For example, any genuine US first strike would likely have involved far more than five weapons.

Agency and accountability

There is little room here for this crucial issue beyond a brief mention. In short, agency usually implies accountability, which is closely related to legal liability. This is a central question in both criminal and civil law: who is responsible for any damages resulting from an error in a machine-supported decision?

So far, in the few cases decided, courts and tribunals have held the organization that deploys an AI agent or chatbot liable for that agent’s errors, just as it would be for the errors of a human agent. There is likely to be a lot more testing of this crucial issue in the near future.

What’s in a name?

When I introduced my company decades ago, our main existential purpose was (and still is) to enable and amplify the agency of knowledge. One of my clients misunderstood our “knowledge agency” tagline as the name of our company. I get many of my best ideas from client conversations — so I tested it, and it stuck. It takes the abstract construct of “knowledge agency” and, by adding a “the” in front, turns it toward the more concrete. This happened organically, a luckily accidental play on the word “agency” — which, according to Merriam-Webster, means both “the capacity, condition, or state of acting or of exerting power” and “an establishment engaged in doing business for another.”

An advertising agency generates value by creating ads, thereby strengthening brands. An insurance agency protects value by selling insurance, thereby safeguarding assets. Following that line of analogy, a ‘knowledge agency’ is a force multiplier for enterprise epistemic resources: data, information, knowledge, and intelligence (DIKI). That is, in effect, our enterprise purpose.

Historical note

I always like to keep track of ‘where we are’ in the broad lifespan of knowledge. Knowledge has not always been valued by its agency. When the Greeks invented knowledge as a construct, in the 4th century BCE, it was seen as having value in itself.

The useful idea of measuring the value of knowledge by what it can do evolved much later, with the Enlightenment in the 17th century. The famous slogan “knowledge is power” springs from Francis Bacon at that time, though there is speculation (since he wrote in Latin) that ‘knowledge + agency = power’ is closer to what he meant. That is, knowledge is a means to power, the ability to effect change in the real world, rather than power itself.

The idea of attaching economic value to knowledge came much later still, in the mid-20th century, with the work of economist Fritz Machlup and others.

While I worry for our epistemic future, I am not at all worried about robots taking over. I am very worried about people so naive as to uncritically cede unwarranted power and agency to algorithms far beyond their understanding and control.
