Big Data: Opportunity or Threat?

‘Big data’ wants to choke your organization. Don’t let it happen.

What is big data?

The McKinsey Global Institute report Big data: The next frontier for innovation, competition, and productivity (May 2011) is a relatively well-informed and hype-free description of the opportunities presented by Big Data. They define Big Data as “datasets whose size is beyond the ability of typical database software to capture, store, manage, and analyze.”

It’s important to note that they define it in terms of a functional capability (the last five words of their definition) rather than as an absolute threshold of dataset size. By their definition, once we can manage and analyze a dataset, it’s no longer ‘big’.

I’d argue that McKinsey’s definition does not go far enough, and that we should actually be concerned with doing something value-productive with the resulting analysis — an argument I’ll develop further below.

How big data works

What we used to call ‘reality’ has morphed from ‘analog reality’ into ‘digital reality’. Nearly everything now carries digital sensors, and all of that data flows into one big pool. It’s similar to what we used to call data warehousing, only this time on steroids.

Then various metrics are captured and analyzed for correlation. When certain factors move together with a certain level of statistical reliability, they are said to be ‘correlated’. It’s tempting to assume they are also related in some causal way — but this is often premature at best. What is needed in addition — and it’s a big methodological step — is a mechanism of causation.
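To make the correlation point concrete, here is a minimal Python sketch. It computes the Pearson correlation coefficient, one common measure of how closely two metrics move together, for two hypothetical metrics that each track a hidden driver (say, outdoor temperature) but have no causal link to each other. The data and names are invented for illustration; a coefficient near 1 says nothing about which metric, if either, causes the other.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical hidden driver, e.g. outdoor temperature over ten days.
driver = [12, 15, 17, 20, 22, 25, 27, 29, 31, 33]

# Two hypothetical metrics: each responds to the driver (plus a small
# deterministic wobble), but neither one influences the other.
metric_a = [2 * t + (1 if i % 2 else -1) for i, t in enumerate(driver)]
metric_b = [5 * t + (3 if i % 3 == 0 else -2) for i, t in enumerate(driver)]

r = pearson(metric_a, metric_b)
print(f"r = {r:.3f}")  # strongly correlated, yet no causal link between a and b
```

The correlation is real, but the mechanism lives in the driver, which neither metric records. Finding that mechanism is the separate methodological step the paragraph above calls for.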

Big Data is essentially a data-up approach. The business case for it rests on the fact that data collection and storage have become relatively inexpensive, often wholly automated through sensors, telecommunications, servers, and so on. The rationale continues that if data costs little to collect, it’s best to collect it just in case, even if the reason for doing so is initially unclear or non-existent.

The hidden fallacy

This rationale behind Big Data sounds reasonable enough — but there’s a catch, a big one. The central problem with this logic is that it neglects to price in (i.e., it treats as ‘free’) the most costly and rare resource in the ‘knowledge value chain’ — the human attention and processing needed to convert the analyzed information into decisions and actions. Without that (so the KVC model says) there is little possibility of creating value, however that’s defined by your organization.

If the human processing element were built into the equation, the ROI would look very different. It would then become clear that just because you CAN gather some data does not necessarily mean you SHOULD, from a cost-effectiveness point of view.
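A minimal sketch of that arithmetic, using invented figures: ROI is taken here as net gain divided by cost, computed first with the human-attention cost left out and then with it priced in. Every number, variable name, and the hourly rate are hypothetical assumptions; the point is only that the same initiative can flip from attractive to loss-making once attention is counted.

```python
def roi(value, cost):
    """Return on investment: net gain divided by cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (value - cost) / cost

# All figures are hypothetical, chosen only to illustrate the argument.
value_of_decisions = 120_000  # value created by decisions the data informs
collection_cost = 10_000      # sensors, telecommunications, servers
storage_cost = 5_000
analyst_hours = 1_500         # human attention needed to turn data into decisions
cost_per_hour = 80

# "Free data" view: attention is treated as costing nothing.
naive = roi(value_of_decisions, collection_cost + storage_cost)

# Fully-costed view: attention is priced in alongside collection and storage.
full = roi(value_of_decisions,
           collection_cost + storage_cost + analyst_hours * cost_per_hour)

print(f"naive ROI: {naive:.1f}")  # naive ROI: 7.0
print(f"full ROI:  {full:.3f}")   # full ROI:  -0.111
```

With these made-up inputs the naive view promises a sevenfold return, while the fully-costed view shows a loss. Real figures will differ, but the structure of the calculation is the same.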

The opportunity

Opportunity and threat often come together — like two sides of the same coin. An opportunity not taken can become a threat, especially when a rival takes it and runs with it. Conversely, a threat mitigated or avoided can become an opportunity.

Big Data is an opportunity to the extent we manage and master it — and a threat if we don’t.

The opportunity, as described by McKinsey, lies in “enhancing the productivity and competitiveness of companies and the public sector and creating substantial economic surplus for consumers.” They claim, for example, that US Health Care could capture additional value (i.e., reduce costs and/or generate revenues) worth $333 billion per year, half of which would come from more effective use of clinical data (for example, for research on the comparative effectiveness of various medical treatments).

The challenge

Sounds miraculous — is there a downside? There is, and McKinsey is forthcoming on that too. In Health Care (one of five economic sectors where they claim there is the biggest opportunity), they note that “the incentives to leverage big data…are often out of alignment…[necessitating] sector-wide interventions…to capture value.”

I’ll amplify that based on case experience. At TKA we’ve seen cases where hospital systems did not want to share clinical data with other systems because they saw such data as a competitive advantage, and sharing it as a potential threat. The barriers are only partly technological (given the relatively nascent state of digital clinical records); they are also natural responses to perceived competitive threats.

In Government (another area of opportunity), McKinsey notes that “Governments have access to large pools of digital data but, in general, have hardly begun to take advantage of the powerful ways in which they could use this information to improve performance and transparency.”

The human factor

One gets the impression that the problem lies not, after all, with the data or even with the technology to use it, but with the perceived ‘value proposition’ of using it. Seen through the lens of the KVC framework, the problem comes from the top of the KVC rather than the bottom, as is often the case.

This is consistent with McKinsey’s assessment that “leveraging big data fundamentally requires both IT investments (in storage and processing power) and managerial innovation.” They point out, for example, that lack of personnel trained in data analysis could be a major barrier. That pesky human factor again…

The threat

The threat is that in not being able to process the data sufficiently, we will allow it to become digital noise that (paradoxically) renders sense-making even more difficult. Data undirected and unused becomes a distraction.

The McKinsey report alludes to this, stating that “human beings may have limits in their ability to consume and understand big data.” My only objection is that they handle this almost parenthetically — as a sidebar, and with a hedge: “MAY have limits…”

Of course human beings have cognitive limits! And of course human beings remain the decision-making heads of all organizations! (We have not yet seen the algorithmically governed robo-corp.) This attention bottleneck has been described by observers from George Miller (“The Magical Number Seven, Plus or Minus Two”) to Tom Davenport (The Attention Economy) to Herbert Simon (“Designing Organizations for an Information-Rich World”).

Data blindness

The typical result is information overload, a condition being experienced by many complex organizations, and documented in recent books such as Frank and Magnone’s excellent Drinking From the Fire Hose. But information overload is the symptom, not the root problem. In my experience, clients love data and need it to make optimal decisions. They’ll never complain of too much of the right stuff. When organizational leaders complain of ‘too much data’, what they’re really describing is ‘too much data of the wrong kind and/or that we cannot use effectively’.

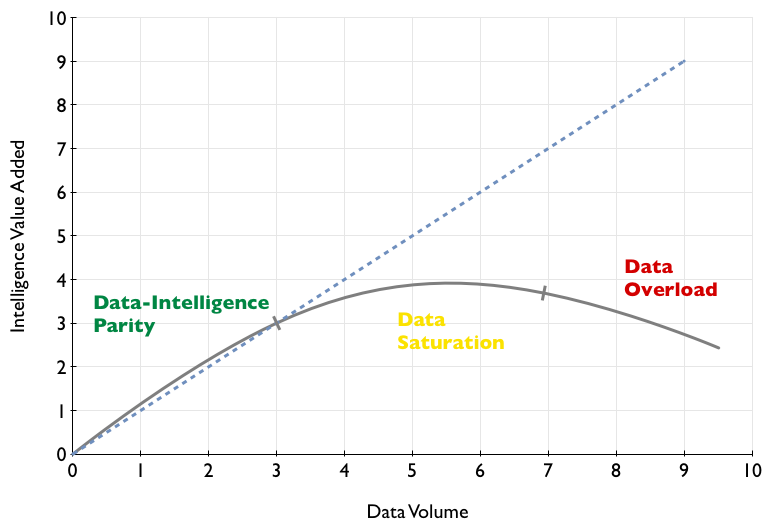

The problem is that in our measuremania we run the short-term risk of failing to distinguish between the meaningful data and the noise, and the longer-term risk of losing our capability to readily draw this distinction. We become permanently data-saturated or -overloaded, as illustrated above.

Prolonged and extreme data overload can result in data blindness, where the glut of noisy pseudo-data clogs, distorts, and even shuts down the perceptual channels within the organization.

In short, we’re rapidly leaving the Age of Information and entering the Age of Too Much Information. In Herbert Simon’s words, “A wealth of information creates a poverty of attention…”

The solution: directed data

The rest of Simon’s quote above reads, “…and a need to allocate that attention efficiently among the overabundance of information sources that might consume it.”

Efficient allocation of attention…exactly! (He was writing this more than four decades ago.) I’d only add “effective” to the prescription — efficient and effective allocation of attention.

How can we operationalize this? Start here: don’t just collect stuff and put it into your organization’s data warehouse (which is often more like a data ocean), hoping that someday, somehow, it will prove meaningful. Doing so overlooks a non-trivial element of the cost, namely human attention, and the resulting fully-costed ROI will not be what you intended.

Dealing with irrelevant data collected this way can actually sap higher-level analytic resources, leaving them unavailable where they are needed. If you spend resources (time, effort, attention, and money) looking at things that don’t matter to your competitive strategy and tactics, you’re likely to run short of resources to look at things that do matter, and so lapse into Simon’s poverty of attention.

You’ll lose the signal, and drown in the noise.

Your approach to Big Data needs to be top-down, not bottom-up, to be most efficient and effective. Its focus should be data directed at answering key questions and/or testing key hypotheses about the economic environment and your competitive standing within that environment. This requires you to make strategic choices before you begin collecting and analyzing.
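One way to picture the top-down approach is to start from the key questions and derive the data feeds they need, rather than starting from whatever feeds happen to be available. The sketch below assumes a hypothetical mapping from questions to feeds; every question and feed name is invented for illustration. Feeds that no key question needs are flagged as candidates to stop collecting.

```python
# Hypothetical key questions, each naming the data feeds needed to answer it.
key_questions = {
    "Are we losing share in the mid-market segment?": {"crm_deals", "pricing_history"},
    "Is the new product line cannibalizing the old one?": {"sales_by_sku", "pricing_history"},
}

# Hypothetical feeds currently being collected "just in case".
collected_feeds = {"crm_deals", "pricing_history", "sales_by_sku",
                   "web_clickstream", "badge_swipes", "printer_logs"}

def directed_feeds(questions):
    """Return the union of feeds required by at least one key question."""
    needed = set()
    for feeds in questions.values():
        needed |= feeds
    return needed

needed = directed_feeds(key_questions)
undirected = collected_feeds - needed  # collected, but serving no key question
missing = needed - collected_feeds     # needed, but not yet collected

print("directed:", sorted(needed))
print("undirected:", sorted(undirected))
print("missing:", sorted(missing))
```

The design choice is simply the direction of the lookup: questions drive the feed list, so every feed that survives has a stated reason to exist, and any gap between what is needed and what is collected shows up explicitly.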

[…] what Big Data is? This blog by Tim Powell is a pretty good summary of the challenges. I particularly like this statement by Powell regarding what’s missing in many Big Data […]